Shining a Light in a Black Box: Creating Better Machine Learning Programs

Cynthia Rudin is pushing the field of artificial intelligence forward.

Our society is awash in data, and people in all fields are clamoring for better ways to use it. How can we use data to better predict which convicted criminals are most likely to commit crimes again … or which hospitalized neurology patients are most likely to have a seizure … or which manholes in New York City are most likely to explode?

Cynthia Rudin, Ph.D., develops computer programs that use machine learning to answer these questions and others. Rudin, who is a professor in the departments of computer science, electrical and computer engineering, and statistics, collaborates with experts in a wide range of fields to generate better solutions to important problems.

“I like using mathematics and algorithms to help make society more efficient,” says Rudin. “And I like to learn about a lot of different things.”

Rudin is pushing the field of artificial intelligence forward by creating and applying innovative techniques in machine learning that increase its interpretability and usefulness.

In machine learning, a computer program is “trained” on a large set of data to perform a particular task. If the task is to identify dogs, the dataset is a large number of photographs that do and don't contain dogs. The human operator doesn't give instructions for how to identify dogs, but does provide feedback on the accuracy of the computer program's attempts. The computer program becomes more accurate over time as it incorporates the feedback and refines its strategies. If the program in this example is what's called a “black box” program, it may be successful on the dataset it was trained on, but it won't share its strategies for recognizing dogs. Is it the ears? The long nose? The collar? The loving look in its eyes? All of the above?
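To make the training process described above concrete, here is a minimal sketch in Python. It is not Rudin's code or any particular library's method: it uses a classic perceptron on six made-up examples, and the three features (floppy ears, long nose, collar) are assumptions chosen to echo the dog example. The only feedback the program receives is whether each guess was right or wrong, and it nudges its weights after every mistake.

```python
# Toy data, invented for illustration. Each example is (features, label),
# with hypothetical features [has_floppy_ears, has_long_nose, wears_collar]
# and label 1 for "dog", 0 for "not a dog".
DATA = [
    ([1, 1, 1], 1),
    ([1, 1, 0], 1),
    ([0, 1, 1], 1),
    ([0, 0, 1], 0),
    ([0, 0, 0], 0),
    ([1, 0, 0], 0),
]


def predict(weights, bias, features):
    """Guess 1 (dog) when the weighted sum of the features is positive."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train(data, epochs=20, learning_rate=1.0):
    """Perceptron training: adjust the weights only after a wrong guess."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in data:
            # Feedback from the "operator": +1, -1, or 0 if the guess was right.
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                bias += learning_rate * error
                weights = [
                    w + learning_rate * error * x
                    for w, x in zip(weights, features)
                ]
        if mistakes == 0:  # a full pass with no errors, so stop early
            break
    return weights, bias


weights, bias = train(DATA)
accuracy = sum(predict(weights, bias, f) == y for f, y in DATA) / len(DATA)
print("weights:", weights, "bias:", bias, "accuracy:", accuracy)
```

With only three weights, this toy model is easy to inspect. A black box program may have millions of parameters, which is why its strategy is so hard to read off.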

At the moment, most machine learning programs are black boxes. Rudin, however, writes computer programs that are interpretable. In other words, she has the computer program show its work in addition to spitting out a solution.

“It's much easier to create a complicated model that a human doesn't understand than to create a model that a human can understand,” she says. “But more interpretability leads to more accuracy of the overall system, because you know what [the program is] doing.”

Black box programs work well enough on problems like identifying dogs or deciding which ads to show consumers on internet browsers, but they aren't as useful for real-world problems with complicated datasets and high-stakes consequences. When a computer program is guiding life-or-death decisions, experts need to be able to double-check the program's work to make sure it's providing reasonable solutions based on sound data. Black boxes make that extremely difficult.

“If you make the model understandable, you can immediately realize if there is something wrong with the model or the dataset it was trained from,” Rudin says. “And it's really easy to detect whether you've made a typographical error [in the input to] an interpretable model.”
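One way to picture a model that shows its work is a simple point-based score. The Python sketch below is illustrative only: the feature names, point values, and threshold are invented for this example and do not come from Rudin's research. It returns not just a decision but the contribution of each input, so a mistyped value stands out.

```python
# Hypothetical point table for flagging a manhole for inspection.
# All names and numbers here are invented for illustration.
POINTS = {
    "past_incidents": 2,      # points per recorded incident
    "cable_age_decades": 1,   # points per decade of cable age
    "inspection_overdue": 3,  # 1 if overdue, else 0
}
THRESHOLD = 6  # flag the manhole when the total reaches this value


def score_with_explanation(record):
    """Return (flagged, total, contributions) so the reasoning is visible."""
    contributions = {
        name: points * record.get(name, 0) for name, points in POINTS.items()
    }
    total = sum(contributions.values())
    return total >= THRESHOLD, total, contributions


correct = {"past_incidents": 1, "cable_age_decades": 3, "inspection_overdue": 0}
typo = {"past_incidents": 1, "cable_age_decades": 30, "inspection_overdue": 0}

for label, record in [("correct", correct), ("typo", typo)]:
    flagged, total, parts = score_with_explanation(record)
    print(label, flagged, total, parts)
```

For the second record, the breakdown attributes 30 of the 32 points to cable age, which makes the mistyped 30 (entered instead of 3) easy to spot.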

Interpretable models also allow the users to evaluate the model and bring their human judgment to bear on its value. For example, a computer program currently used in the criminal justice system to predict a criminal's likelihood of reoffending has come under fire for promoting decisions that appear to be racist. But because it's a black box program, it's difficult for users to evaluate whether that's true. “Fairness is much easier to assess when you know what the model is doing,” Rudin says.

In her most recent project, Rudin has teamed up with Catherine Brinson, Ph.D., to improve the way new materials are designed. Brinson is the Sharon C. and Harold L. Yoh, III, Distinguished Professor and the Donald Alstadt Chair of the Thomas Lord Department of Mechanical Engineering and Materials Science.

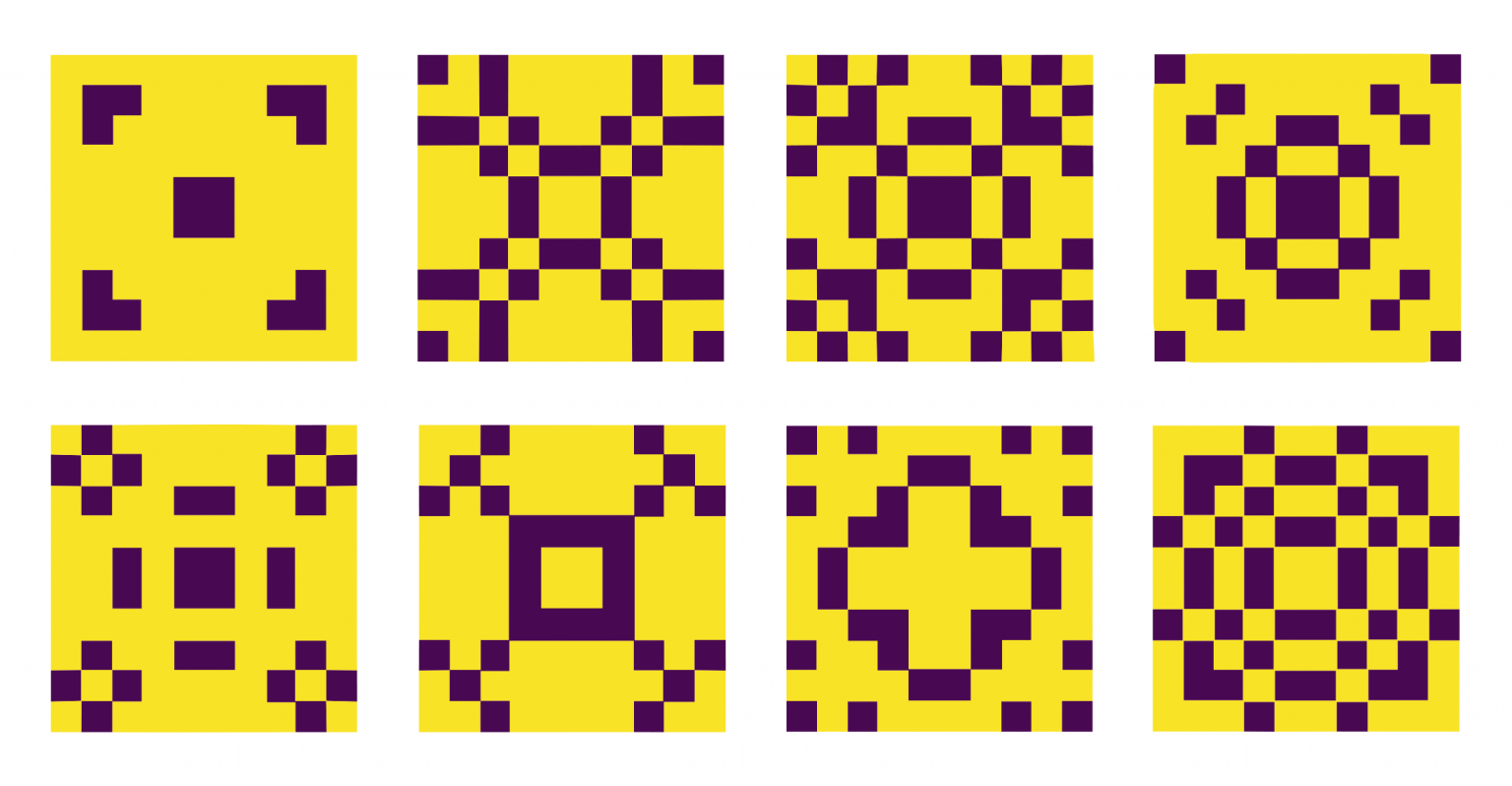

Crystal structure representations of materials studied by Rudin and Brinson.

Photo credit: Zhi Chen

Brinson combines polymers and nanoparticles in novel ways to make both nanocomposites and metamaterials, which are materials engineered to behave in unexpected, and potentially very useful, ways. A metamaterial could, for example, stop certain types of mechanical waves from passing through it. A material like this could be an excellent packing material for the Mona Lisa, because the force of an impact or vibrations would not travel through the material. “You can imagine shipping a piece of art surrounded by material that doesn't just dampen a blow like Styrofoam, but actually makes the mechanical impact in the object go to zero,” Brinson says.

While she can certainly list many such possible applications of her metamaterials, she says, “The most important application could be something unexpected. These materials could enable something that was impossible before.” For that reason, Brinson focuses on understanding the fundamentals of how metamaterials work — which geometries lead to the cessation of elastic waves. And machine learning helps with that.

That's because changing small details of the geometries, the choice of materials used, or even the scale at which she produces them can have surprising and counterintuitive results. “It's an infinite space of possibility, and you can't make and test everything or even compute all possibilities,” Brinson says. Machine learning can discover patterns that elude the human brain, highlighting ways to change the recipe to get a desired result.

Rudin explains, “Interpretable models can guide the design of these new materials [by helping you] understand what aspects of materials cause or lead to specific properties.”

In addition to their scientific collaboration, Rudin and Brinson are working together to create a program, funded by the National Science Foundation, to offer training at the intersection of artificial intelligence and materials science for Ph.D. students in engineering, math, computer science, or the physical sciences. The program, called Artificial Intelligence for Understanding and Designing Materials (aiM), welcomed its first group of students in 2021.

Rudin and Brinson both point to Duke's culture of interdisciplinary collaboration as an important underpinning of their work together.

“I've never been anywhere like Duke,” Rudin says. “It's a climate where people aren't against each other; they are working for each other and with each other. That's why I came here.”
